7 Group Voting Methods For Ranking Any List of Ideas

Ranking exercises are an ideal way to shortlist ideas, identify top suggestions, and give your colleagues a voice in the decision-making process.

I’ve helped teams run ranking projects with millions of votes cast in total, so I’ve learned a thing or two about the most common mistakes to avoid! While ranking surveys sound simple in theory, a poorly run exercise risks undermining your entire project or sparking disputes and grudges amongst teammates that can be difficult to resolve.

This post covers (1) explanations of the seven most popular ranking methods, (2) tips on which methods are best suited to specific scenarios, and (3) best practices for designing ranking exercises. Let’s dive in!

7 Methods for Ranking Your Team’s Ideas

1. Pairwise Ranking

My favorite voting format for ranking exercises is pairwise ranking, which involves breaking up a list of options into a series of head-to-head pair votes. Pairwise ranking is ideal for almost any scenario, whether you’re group voting or just trying to rank your own personal preferences, and works for long lists of 10+ options as well as short ranking sets.

Unlike other ranking methods, pairwise ranking surfaces people’s implicit preferences. When it comes to voting on ideas, it can be a lot of mental work to figure out what your own preferences are. By breaking the list down into a series of “A vs B” decisions, pairwise ranking gives respondents a really easy way to share their personal preferences.
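Mechanically, breaking a list into head-to-head pairs is simple. n options produce n * (n - 1) / 2 distinct pairs, so 10 options give 45 pairs and 20 options give 190. Because that number grows quickly, a tool can show each participant only a random subset. The Python sketch below is an illustration under that assumption, not how OpinionX or any other tool actually selects pairs; `all_pairs` and `sample_pairs` are hypothetical helper names.

```python
import itertools
import random


def all_pairs(options):
    """Every distinct head-to-head pair: n * (n - 1) / 2 of them."""
    return list(itertools.combinations(options, 2))


def sample_pairs(options, pairs_per_participant, seed=None):
    """Pick a random subset of pairs for one participant (hypothetical helper)."""
    rng = random.Random(seed)
    pairs = all_pairs(options)
    chosen = rng.sample(pairs, min(pairs_per_participant, len(pairs)))
    # Randomize left/right order within each pair so neither position is favored.
    return [tuple(rng.sample(pair, 2)) for pair in chosen]


ideas = ["Idea A", "Idea B", "Idea C", "Idea D", "Idea E"]
print(len(all_pairs(ideas)))           # 5 options -> 10 possible pairs
print(sample_pairs(ideas, 4, seed=1))  # 4 random pairs for one participant
```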

You can create free pairwise ranking surveys on a tool like OpinionX, which automatically picks the head-to-head pairs and calculates all your results on a simple 0-100 scale. You can even set how many pairs you want each participant to vote on.
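The post doesn’t describe how OpinionX turns votes into its 0-100 scores, so here is one simple, assumed approach: an option’s win rate (the share of its head-to-head appearances that it won), multiplied by 100. `win_rate_scores` is a hypothetical helper.

```python
from collections import defaultdict


def win_rate_scores(votes):
    """
    votes: list of (winner, loser) tuples, one per head-to-head decision.
    Returns {option: score}, where score = wins / appearances * 100.
    """
    wins = defaultdict(int)
    appearances = defaultdict(int)
    for winner, loser in votes:
        wins[winner] += 1
        appearances[winner] += 1
        appearances[loser] += 1
    return {opt: round(100 * wins[opt] / appearances[opt], 1) for opt in appearances}


votes = [
    ("Idea A", "Idea B"),
    ("Idea A", "Idea C"),
    ("Idea C", "Idea B"),
    ("Idea B", "Idea A"),
]
scores = win_rate_scores(votes)
for option, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{option}: {score}")
# Idea A: 66.7
# Idea C: 50.0
# Idea B: 33.3
```

Win rate is easy to explain but ignores who each option was up against. Models such as Bradley-Terry or Elo ratings account for opponent strength, at the cost of being harder to explain to participants.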

— — —

2. Ranked Choice Voting

Ranked choice voting usually takes the form of a rank order question, the most common type of ranking exercise people encounter online. Respondents drag and drop a list of options into their preferred order before submitting their final ranking. One thing to note: most survey providers recommend using rank order questions only for lists of 5-10 options at most. For longer lists, pairwise ranking is a better solution.
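The post doesn’t say how individual rank orders are combined into a group result. One common scoring rule is the Borda count, sketched below as an assumption rather than any survey tool’s documented method: with n options, first place earns n - 1 points, second place earns n - 2, and so on down to 0 for last place.

```python
def borda_scores(rankings):
    """
    rankings: list of lists, each one respondent's full ranking, best first.
    With n options, 1st place earns n - 1 points, 2nd earns n - 2, ..., last earns 0.
    Returns totals sorted from highest to lowest.
    """
    totals = {}
    for ranking in rankings:
        n = len(ranking)
        for position, option in enumerate(ranking):
            totals[option] = totals.get(option, 0) + (n - 1 - position)
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


responses = [
    ["Apples", "Bananas", "Cherries"],
    ["Bananas", "Apples", "Cherries"],
    ["Apples", "Cherries", "Bananas"],
]
print(borda_scores(responses))
# {'Apples': 5, 'Bananas': 3, 'Cherries': 1}
```

Be aware that in elections, "ranked choice voting" usually refers to instant-runoff voting, which eliminates last-place options round by round instead of summing points and can produce a different winner.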

— — —

3. Points Allocation

Points allocation questions (also known as Constant Sum) ask respondents to allocate a budget of points amongst a set of options according to their personal preferences. Points-based ranking allows participants to signify the magnitude of their preferences, not just the ranked order. For example, we don’t just learn that Simon prefers apples to bananas, we see that he would give 9 of his 10 points to apples.

Points allocation is almost the opposite of pairwise ranking: its ranked results are based on participants’ explicit preferences (what they consciously know they like). This makes it well suited to decisions with an explicit rationale, like purchase motivators, rather than situations that draw on more implicit criteria, like idea ranking.
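Aggregating points allocation results mostly comes down to checking that each respondent spent exactly their budget, then averaging each option’s share. A minimal sketch, assuming a hypothetical 10-point budget and illustrative helper names:

```python
BUDGET = 10  # hypothetical: each respondent has 10 points to spend


def validate(allocation, budget=BUDGET):
    """Reject allocations that use negative points or don't spend exactly the budget."""
    if any(points < 0 for points in allocation.values()):
        raise ValueError("points cannot be negative")
    total = sum(allocation.values())
    if total != budget:
        raise ValueError(f"allocation must sum to {budget}, got {total}")


def average_share(allocations, budget=BUDGET):
    """Average percentage of the budget each option received across respondents."""
    totals = {}
    for allocation in allocations:
        validate(allocation, budget)
        for option, points in allocation.items():
            totals[option] = totals.get(option, 0) + points
    n = len(allocations)
    return {option: round(100 * total / (budget * n), 1) for option, total in totals.items()}


allocations = [
    {"Apples": 9, "Bananas": 1},  # Simon, from the example above
    {"Apples": 4, "Bananas": 6},
]
print(average_share(allocations))
# {'Apples': 65.0, 'Bananas': 35.0}
```

Averaging percentage shares, rather than only counting which option came first, is what preserves the magnitude information that makes constant sum questions useful.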

— — —

4. MaxDiff Analysis (Best/Worst Scaling)

MaxDiff Analysis ranks people’s preferences by asking them to choose the best and worst option from a group of 3-6 statements. Each time the respondent votes, a new set of statements from the overall list of ranking options is shown. The “best” and “worst” labels in a MaxDiff survey can be changed to suit your research context, such as Favorite / Least Favorite, Top / Bottom, or Most Preferred / Least Preferred.
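A quick way to score MaxDiff responses is a count-based "best minus worst" score: how often an option was picked as best, minus how often it was picked as worst, divided by how often it was shown. The sketch below assumes that approach; dedicated MaxDiff tools often fit statistical models (such as multinomial logit or hierarchical Bayes) instead, which this does not attempt. Statements "A" to "F" are hypothetical.

```python
from collections import Counter


def maxdiff_count_scores(tasks):
    """
    tasks: list of (shown, best, worst) tuples, one per voting screen,
    where `shown` lists the options displayed on that screen.
    Score = (times chosen best - times chosen worst) / times shown, from -1.0 to +1.0.
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for options, best_pick, worst_pick in tasks:
        shown.update(options)
        best[best_pick] += 1
        worst[worst_pick] += 1
    return {option: (best[option] - worst[option]) / shown[option] for option in shown}


tasks = [
    (["A", "B", "C", "D"], "A", "D"),
    (["A", "B", "E", "F"], "B", "F"),
    (["C", "D", "E", "F"], "C", "F"),
]
print(maxdiff_count_scores(tasks))
# {'A': 0.5, 'B': 0.5, 'C': 0.5, 'D': -0.5, 'E': 0.0, 'F': -1.0}
```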

— — —

5. Dot Voting

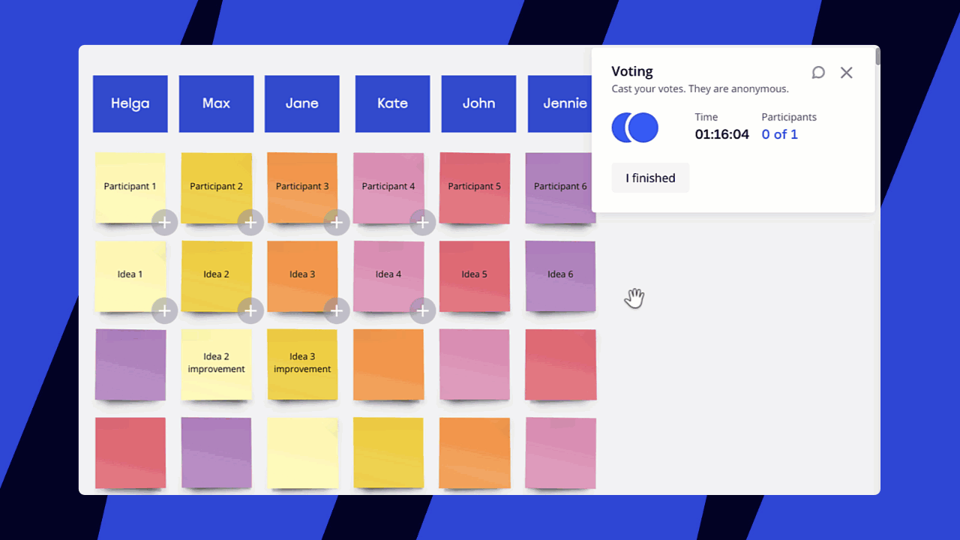

Dot voting is like the traditional, analog version of the points allocation method. Each participant gets a number of “dots” to distribute amongst a set of options, either on a virtual whiteboard or using in-person posters and stickies, which is why it’s often used in focus groups. While it’s quick and hands-on for participants, it often requires manual tallying and leaves no room for follow-up questions or additional research.
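The manual tallying step is easy to automate once the dots are transcribed. A minimal sketch, assuming a hypothetical budget of 3 dots per person and that participants may stack several dots on the same option (conventions vary between facilitators):

```python
from collections import Counter

DOTS_PER_PERSON = 3  # hypothetical dot budget


def tally_dots(ballots, dots_per_person=DOTS_PER_PERSON):
    """
    ballots: {participant: [option, option, ...]}, one list entry per dot placed.
    Stacking dots on one option is allowed; exceeding the budget raises an error.
    Returns (option, dots) pairs from most to fewest dots.
    """
    totals = Counter()
    for participant, dots in ballots.items():
        if len(dots) > dots_per_person:
            raise ValueError(f"{participant} used {len(dots)} dots (limit {dots_per_person})")
        totals.update(dots)
    return totals.most_common()


ballots = {
    "Ana": ["Idea A", "Idea A", "Idea C"],
    "Ben": ["Idea B", "Idea C", "Idea C"],
    "Cai": ["Idea A", "Idea B", "Idea C"],
}
print(tally_dots(ballots))
# [('Idea C', 4), ('Idea A', 3), ('Idea B', 2)]
```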

— — —

6. Image Voting

Mockups, prototype sketches, and visual drawings transmit the core message behind an idea faster than text or speech alone. Allow team members to submit simple images that represent their ideas and use pairwise ranking to turn those images into ranked results.

— — —

7. Consensus Ranking

Sometimes, it’s more important to find consensus perspectives than simply the highest-voted idea. Agreement ranking, which shows participants a statement and asks them to agree or disagree, is a well-studied mechanism used in many public consultation projects (e.g., the vTaiwan participatory democracy project).
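One simple way to favor consensus over raw popularity is to score each statement by its lowest agreement rate across respondent groups, so a statement only ranks highly if every group tends to agree with it. This is a hypothetical rule for illustration, not the algorithm used by vTaiwan or its tooling; the group labels and helper name are assumptions.

```python
def consensus_scores(votes):
    """
    votes: list of (group, statement, agrees) tuples, where agrees is True or False.
    Score = the lowest agreement rate any group gave the statement (0.0 to 1.0).
    Statements that some group never voted on are left out rather than guessed.
    """
    all_groups = {group for group, _, _ in votes}
    tallies = {}  # statement -> group -> [agree_count, vote_count]
    for group, statement, agrees in votes:
        counts = tallies.setdefault(statement, {}).setdefault(group, [0, 0])
        counts[0] += int(agrees)
        counts[1] += 1
    scores = {}
    for statement, by_group in tallies.items():
        if set(by_group) == all_groups:
            scores[statement] = min(agree / total for agree, total in by_group.values())
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


votes = (
    [("Team X", "Idea P", True)] * 4
    + [("Team Y", "Idea P", False)]
    + [("Team X", "Idea Q", True)] * 3
    + [("Team X", "Idea Q", False)]
    + [("Team Y", "Idea Q", True)]
)
print(consensus_scores(votes))
# {'Idea Q': 0.75, 'Idea P': 0.0}
```

Both statements have 80% overall agreement (4 of 5 votes), but only Idea Q is supported by both teams.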

— — —

3 Specific Scenarios To Consider

1. Respondent Device

As of 2022, over 56% of surveys are answered on mobile devices, but small phone screens are pretty terrible for most ranking questions (especially image ranking). Pairwise ranking is particularly well-suited to mobile respondents because it only shows two options at a time.

2. List Length

The most common ranking format (drag-and-drop rank ordering) is really bad for ranking long lists. SurveyMonkey says not to use it for more than 5 options, Qualtrics says 6-10 max, and Slido vaguely says “less is more”. A 2016 study found rank order questions become increasingly error-prone and time-consuming as more options are added. If you’re ranking 10 or more options, you really should switch from rank order to pairwise ranking.

3. Multiple Teams / Groups

If you’re engaging multiple teams, groups, cohorts, or segments with one survey, there are several things to consider. First, ensure your survey collects data that identifies which group each respondent belongs to (easily done via multiple-choice questions). This data lets you filter and compare ranked results for each segment.

In more extreme cases, you might need to customize your ranking list for each team. In these cases, you can use survey branching to send respondents to different ranking questions based on their answers to multiple-choice questions.

[Figure: a quick example showing why it’s important to consider segmentation when analyzing ranked results]
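Segmenting results just means computing the same scores separately for each group. The sketch below uses a simple win-rate score (an assumption, not any specific tool’s scoring method) and groups pairwise votes by a segment label, such as the answer to a "Which team are you on?" multiple-choice question. The segment names are hypothetical.

```python
from collections import defaultdict


def win_rates_by_segment(votes):
    """
    votes: list of (segment, winner, loser) tuples from pairwise votes.
    Returns {segment: {option: win rate on a 0-100 scale}} for side-by-side comparison.
    """
    wins = defaultdict(lambda: defaultdict(int))
    seen = defaultdict(lambda: defaultdict(int))
    for segment, winner, loser in votes:
        wins[segment][winner] += 1
        seen[segment][winner] += 1
        seen[segment][loser] += 1
    return {
        segment: {opt: round(100 * wins[segment][opt] / n, 1) for opt, n in counts.items()}
        for segment, counts in seen.items()
    }


votes = [
    ("Sales", "Idea A", "Idea B"),
    ("Sales", "Idea A", "Idea B"),
    ("Engineering", "Idea B", "Idea A"),
    ("Engineering", "Idea B", "Idea A"),
]
print(win_rates_by_segment(votes))
# {'Sales': {'Idea A': 100.0, 'Idea B': 0.0}, 'Engineering': {'Idea B': 100.0, 'Idea A': 0.0}}
```

Pooled together, both ideas would score 50, which hides the fact that the two teams disagree completely.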

Picking the right tool for your idea ranking exercise

Every ranking method described in this post (excluding MaxDiff) is available for free on OpinionX, a free research tool for ranking people’s priorities. OpinionX is used by thousands of teams from companies that include Google, Microsoft, Amazon, Shopify, Spotify and more.

Create and launch your own ranking exercise on OpinionX in less than 5 minutes. You don’t need any credit card, there’s no time limit, and you can create as many surveys / questions / ranking options as you’d like on the free tier.

— — —

Avoid common pitfalls with these best practice principles

1. Comparative

The best kind of ranking is comparative. It doesn’t just ask people to rate each option individually from 1-5 stars; it forces them to compare options and decide which matter most to them. Every ranking method in this post except method 7 (Consensus Ranking) is a comparative format.

Avoid: Rating methods like 1-5 Stars or Likert Scales.

Adopt: Comparative methods like Pairwise Ranking.

2. Crowdsourced

The best ranking exercises start well before any votes are cast. To create meaningful participation, allow your team to share their ideas, contribute towards the list of options, and shape the overall discussion.

Avoid: Shallow or performative participation.

Adopt: Crowdsourced ranking lists.

3. Countable

With any research or engagement project, it’s important to consider what kind of output you’ll need for your follow-on decisions or actions. In almost all cases, the best output from a ranking exercise is a numeric, easy-to-understand dataset.

Avoid: Vague, unactionable, or purely qualitative outcomes.

Adopt: Quantitative or mixed methods ranking.

4. Collective

You’re likely giving your team a vote in your ranking exercise because you want to create a process that fosters buy-in and ownership of the outcome. The easiest way to ruin that is to give unequal influence to different participants. There are great ways to isolate or compare different segments of your respondents while keeping things fair (e.g., segmentation filters during results analysis).

Avoid: Exclusive or unequal participation.

Adopt: One person = one vote.