Using Surveys for A/B Testing [Methods, Examples, Tools]

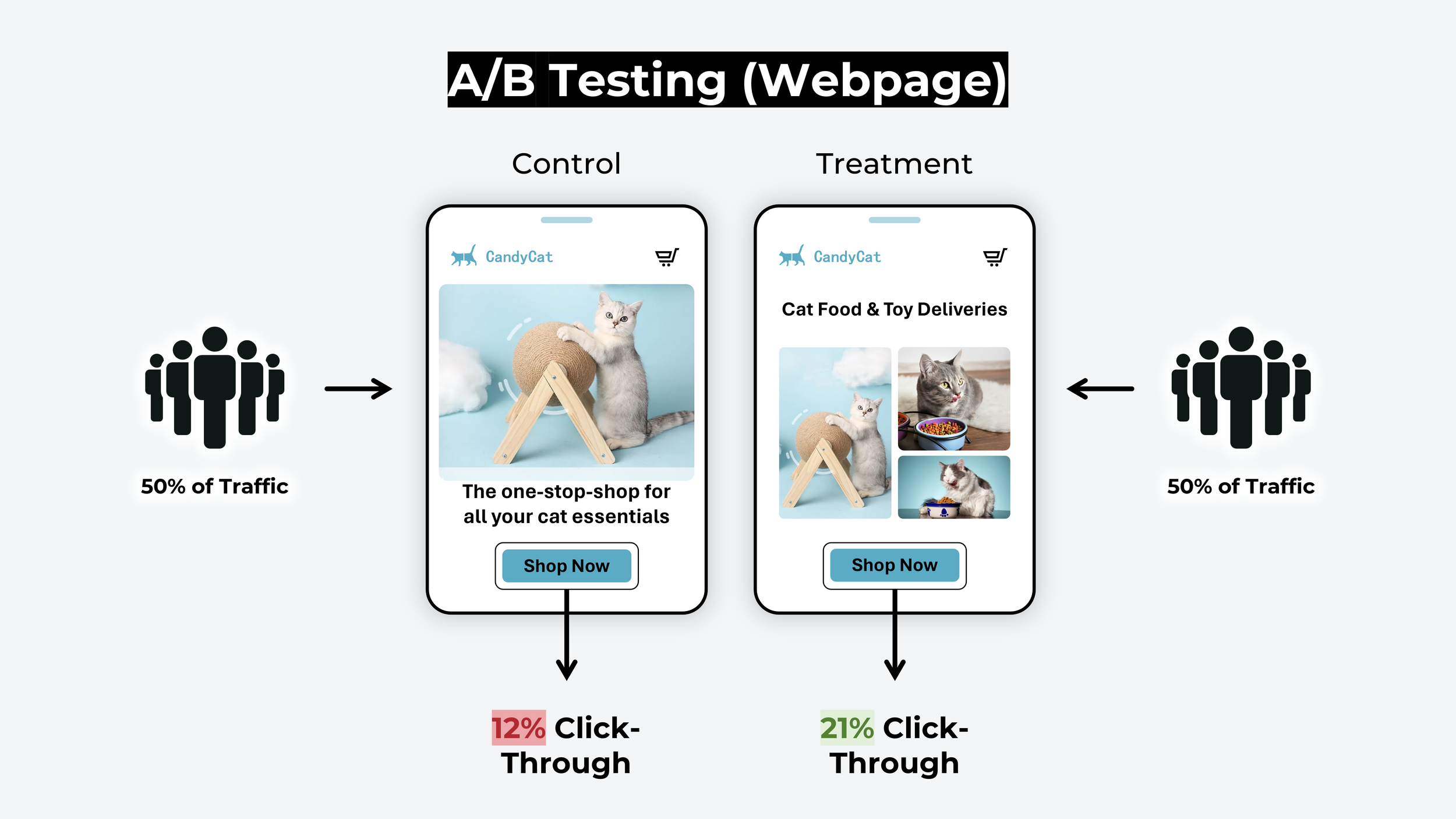

Most people assume that A/B testing means running an experiment that compares the performance of two similar versions of a webpage. But that’s just one type of A/B test. There are a bunch of ways you can create an A/B test, including with online surveys.

In fact, online surveys can be a much faster and cheaper way to run A/B tests! In this blog post, I explain how to use online surveys to run A/B experiments on things like images, ideas, ad copy, sales messaging, problem statements, and more.

What is A/B testing?

An A/B test is an experiment that compares two or more versions of something (like a webpage, advertisement, or message) to figure out which one performs better. That generally involves splitting people into groups who each see a different version and then measuring which version produces the most clicks or customers.

One of the biggest challenges with running an A/B test is that you need a LOT of people to participate in order to generate statistically valid results. Ronny Kohavi (who has held senior experimentation and data roles at Airbnb, Microsoft and Amazon) says that “unless you have at least tens of thousands of users for an A/B test, the statistics just don't work out. A retail site trying to detect changes will need something like 200,000 users”.

Well, that’s disappointing… Most of us don’t have 200,000 people waiting around for an experiment! The good news is, there are plenty of ways you can use the principles of A/B testing with much smaller sample sizes when comparing things like design mockups, concept images, ad copy, problem statements, sales messaging, and loads more.

So what would a survey-based A/B test look like?

How to run A/B tests using online surveys

There are two ways you can conduct A/B tests using online surveys:

1. Comparative Preference Testing

2. Monadic Studies

Regardless of which you choose, it’s essential that your analysis also includes:

3. Segmentation Analysis

Let’s break these three topics down in more detail…

1. Comparative Preference Testing

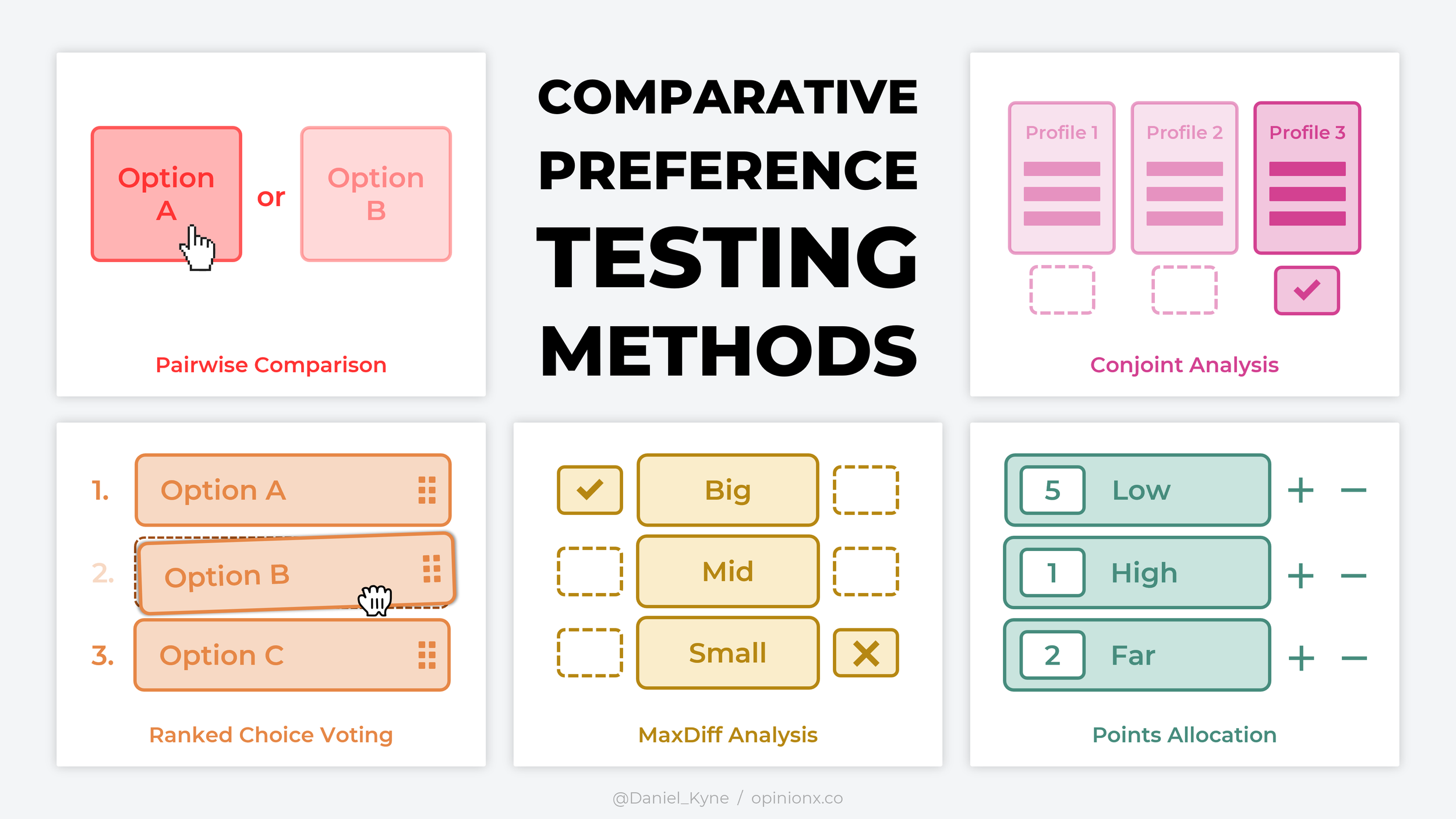

Comparative Preference Testing (also known as discrete choice analysis) is a research technique that forces people to compare options head-to-head and analyzes their votes to calculate which options they like or dislike most.

Question formats that use forced comparison and are suitable for A/B testing surveys include:

Pairwise Comparison — shows two options at a time in a series of head-to-head votes.

Points Allocation — distribute a pool of points according to your personal preferences.

Ranked Choice Voting — rank the list of options in order based on your personal preferences.

MaxDiff Analysis — pick the best and worst options from a list of 3-6 choices.

Conjoint Analysis — pick the best ‘profile’ (made up of a series of category-based variables) to identify the most important category and the ranked order of variables within each category.

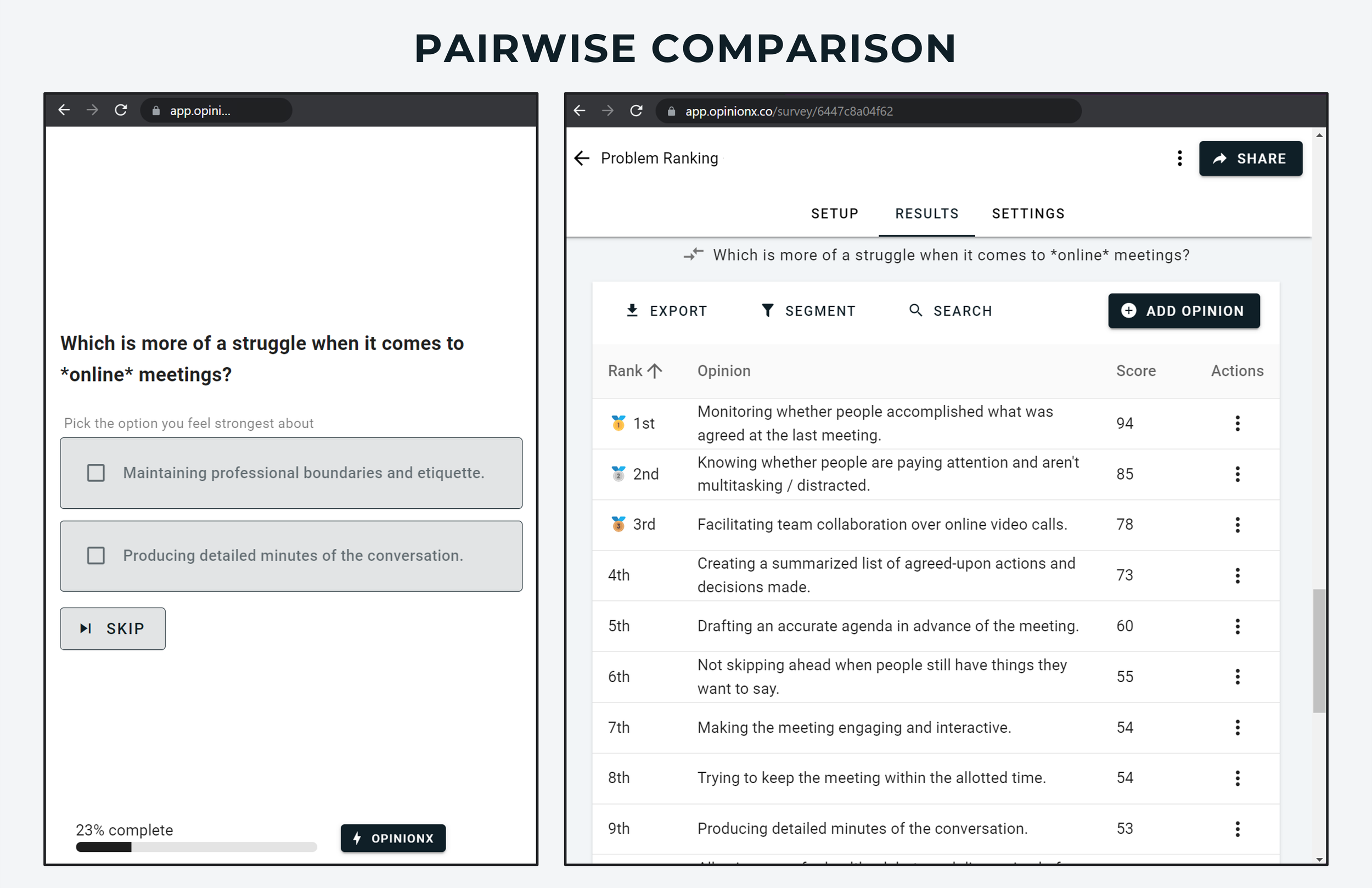

Pairwise comparison is a simple question format that shows a person two options at a time and asks them to pick the one they feel most strongly about (whether that’s the most preferred, most urgent, most disliked, etc.; you set the context for comparison). Each time the participant chooses one option from the pair, two new options appear in their place. The choices they make are used to calculate the “win rate” for each option, i.e. how many times the option was picked out of all the pairs it appeared in.

The biggest benefit of a comparative ranking method like pairwise comparison is that it works the same way that people make decisions in real life, by comparing the options available and choosing the one that most appeals to their current needs, pains or desires.

In pairwise comparison, the required number of respondents is WAY lower than for a website A/B test. For example, if I have 20 options to rank and each respondent will be shown 20 pairs of options to vote on, then I only need a minimum of 29 respondents to meet my minimum sample size requirements (a detailed explanation of this calculation can be found here).
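The linked explanation isn't reproduced in this post, so here is only one plausible way to arrive at a number like that. The sketch below assumes the rule of thumb is "every unique pair of options should be voted on about three times". That rule, the `votes_per_pair` parameter, and the function name are my assumptions for illustration, not necessarily the calculation the link describes, though it does land on 29 for the example above.

```python
import math

def min_respondents(num_options: int, pairs_per_respondent: int,
                    votes_per_pair: int = 3) -> int:
    """Rough minimum sample size for a pairwise comparison survey.

    ASSUMPTION: each unique pair of options should collect about
    `votes_per_pair` votes in total. This rule is illustrative only.
    """
    # Number of distinct head-to-head pairs among the options
    unique_pairs = math.comb(num_options, 2)
    # Total votes needed across all respondents
    total_votes_needed = unique_pairs * votes_per_pair
    # Each respondent contributes `pairs_per_respondent` votes
    return math.ceil(total_votes_needed / pairs_per_respondent)

# 20 options, 20 pairs shown per respondent
print(min_respondents(20, 20))  # 29
```

With 20 options there are 190 unique pairs, so three votes per pair means 570 votes, which takes 29 respondents at 20 votes each.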

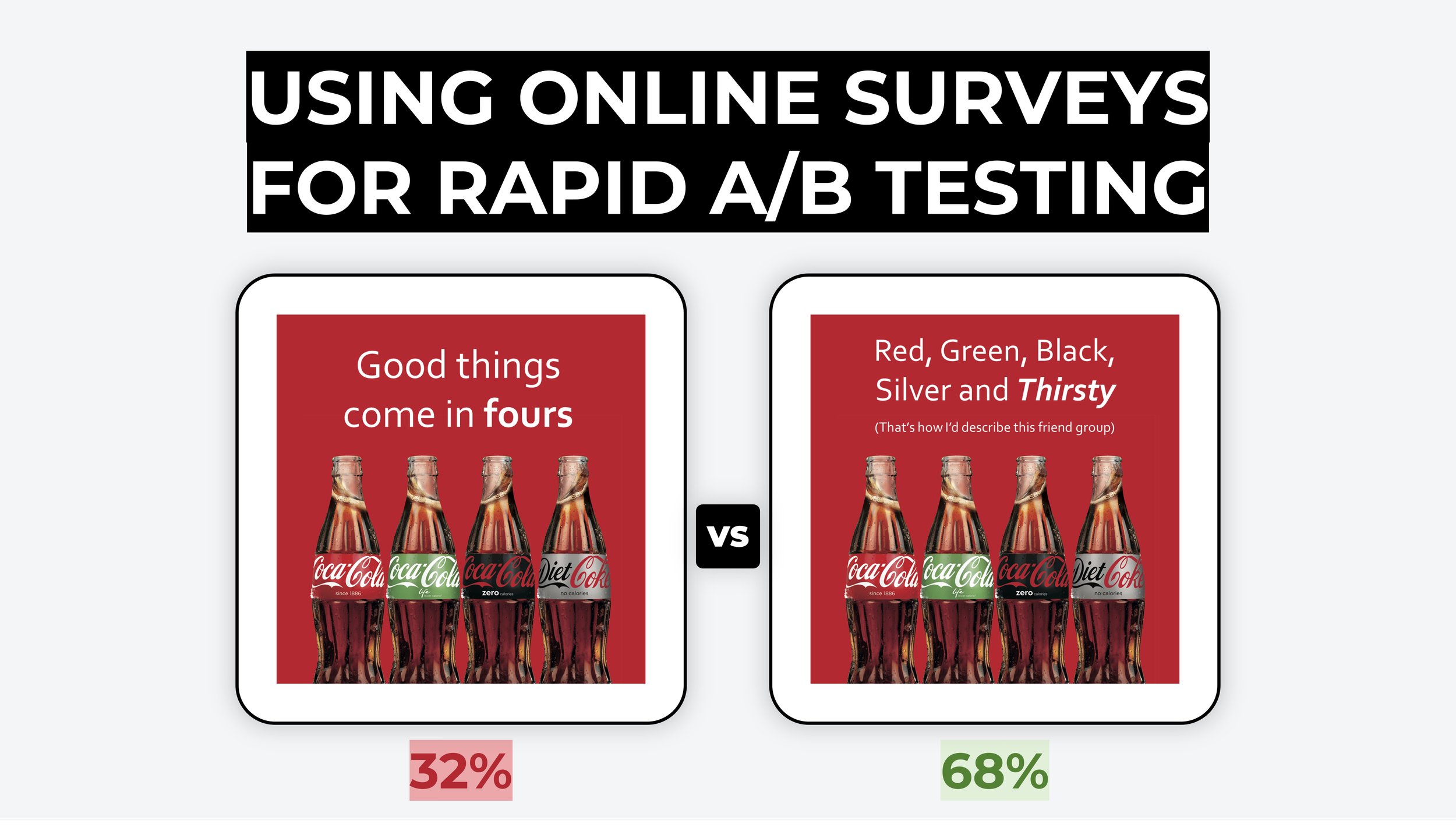

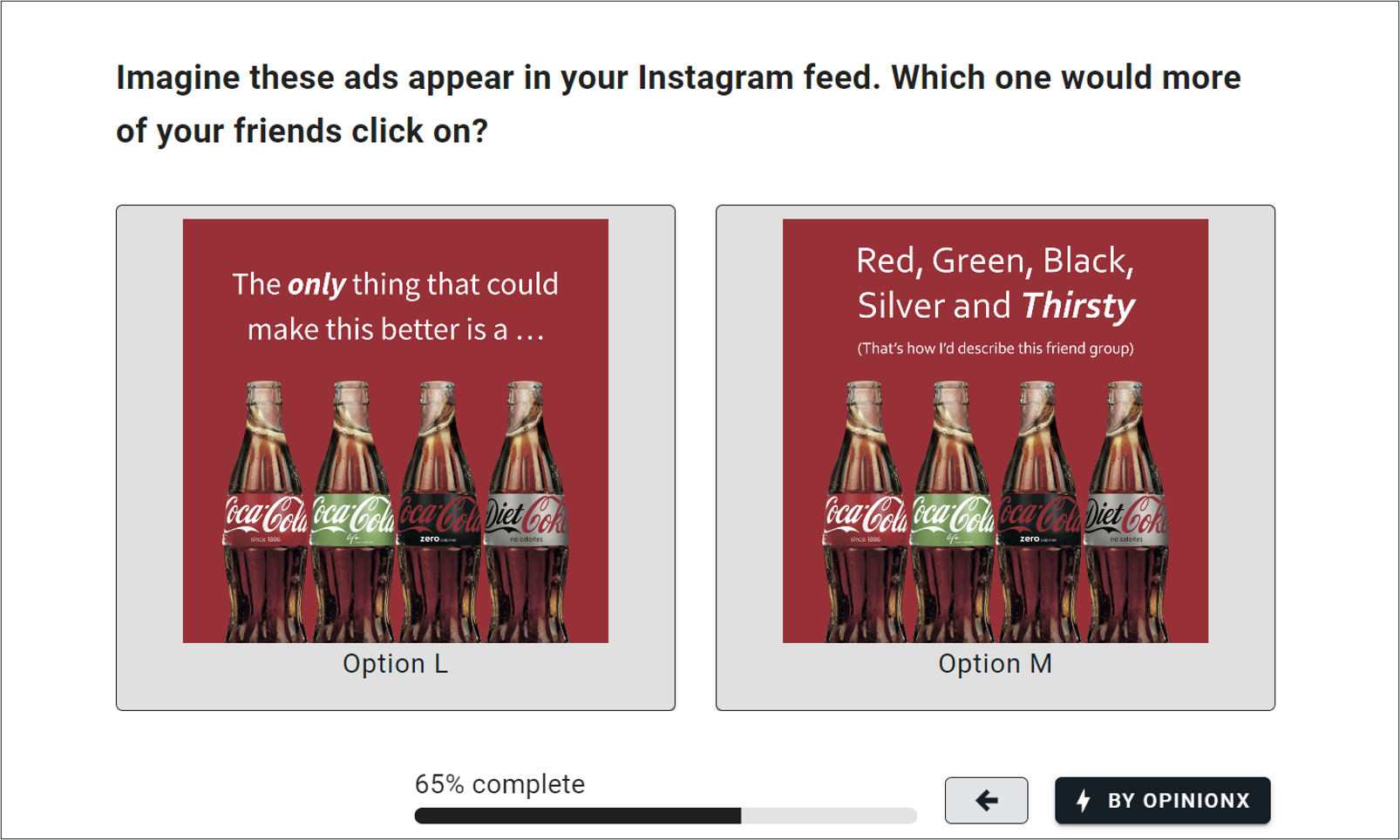

So, how can pairwise comparison be used for an A/B test? Well, an A/B test has two important components — controlled variables and a behavioral scenario. Controlled variables are your list of similar options (like ten identical images for an Instagram ad that each have a different text caption). The “behavioral scenario” is the context that the experiment participant considers when viewing the options (eg. casually scrolling through your Instagram feed).

These two aspects work perfectly for a pairwise comparison survey. We include the “behavioral scenario” in the pairwise question and the “controlled variables” are the list of options we include for ranking. Here’s an example:

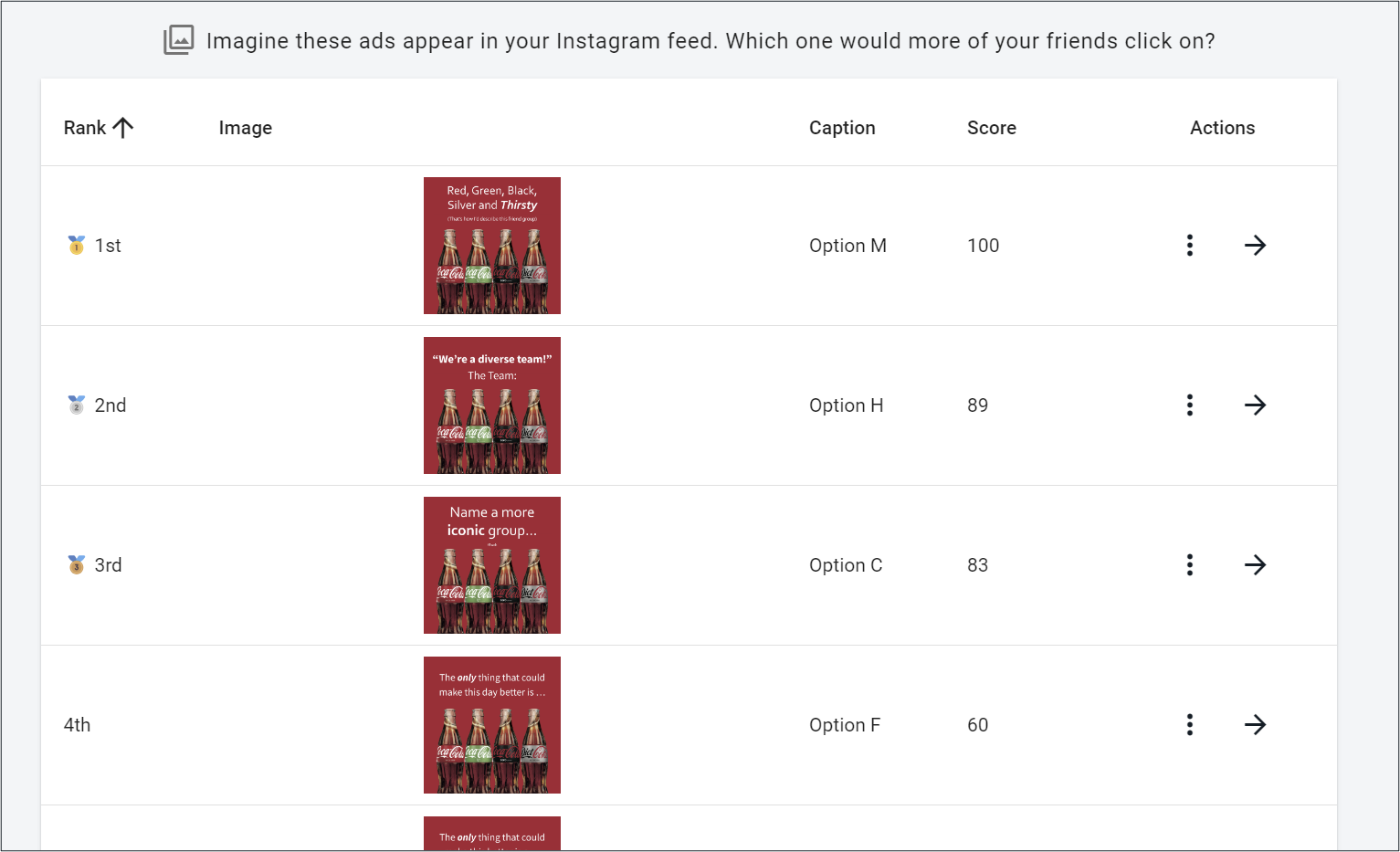

After participants have voted, we rank the options from 1st to last based on their “win rate” score. For example, in the screenshot below, “Option H” has a score of 89, which means it appeared in 9 pairs and was picked as the better option 8 times (i.e. 8/9 = 89%).

These screenshots of pairwise comparison voting and results were both taken from OpinionX, which is a free ranking survey tool that offers a bunch of different comparison-based ranking formats.

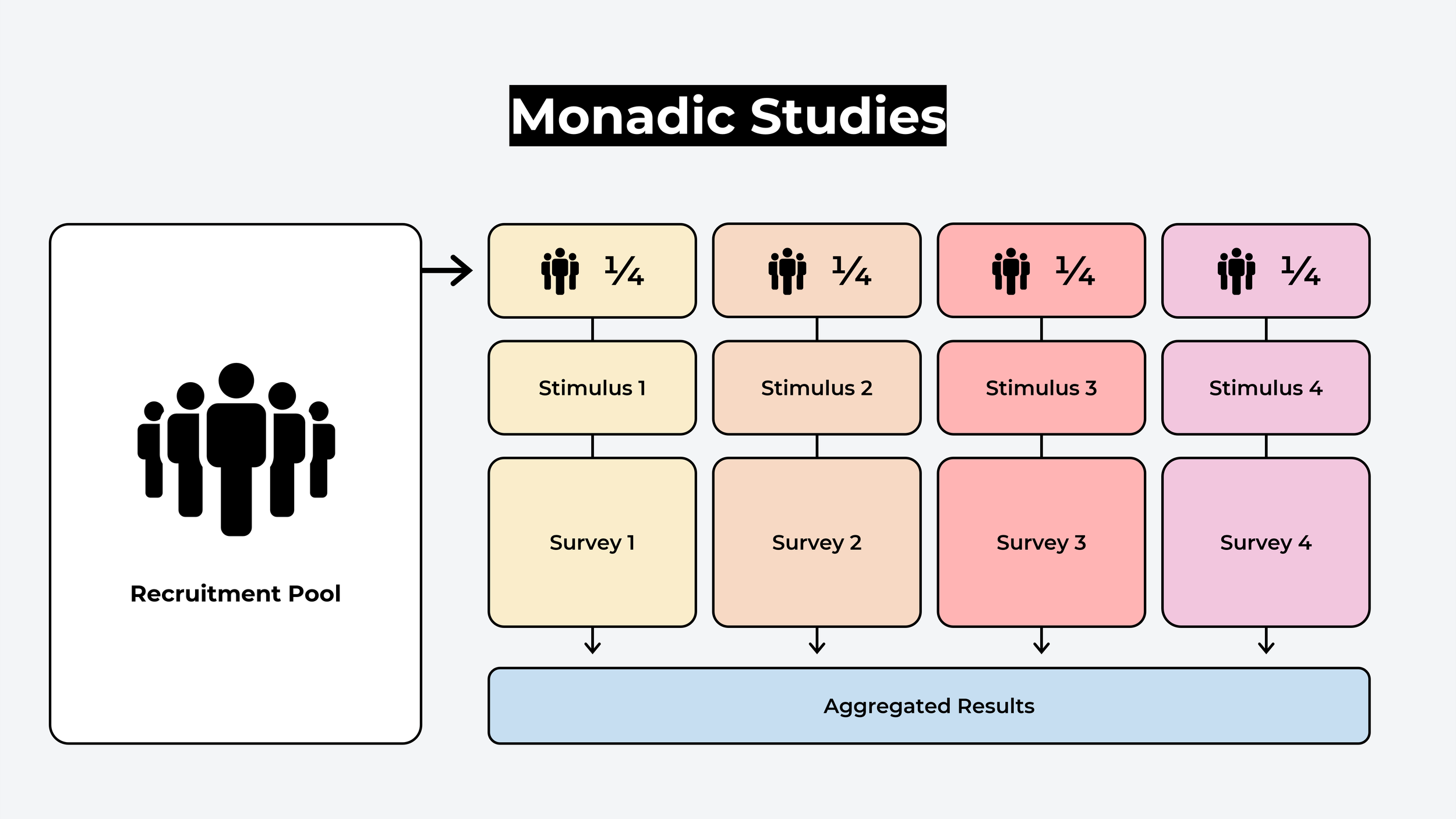
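If you want to sanity-check a win rate score yourself, the calculation described above can be sketched in a few lines of Python. The vote data and function names here are made up for illustration (this is not how any particular survey tool stores or computes its results); it simply counts how often each option was picked out of all the pairs it appeared in.

```python
from collections import defaultdict

def win_rates(votes):
    """votes: list of (winner, loser) tuples, one per pair shown."""
    appearances = defaultdict(int)
    wins = defaultdict(int)
    for winner, loser in votes:
        appearances[winner] += 1
        appearances[loser] += 1
        wins[winner] += 1
    # Win rate = times picked / pairs appeared in, as a whole percentage
    return {opt: round(100 * wins[opt] / appearances[opt])
            for opt in appearances}

def rank_by_win_rate(votes):
    """Options sorted from highest to lowest win rate."""
    return sorted(win_rates(votes).items(),
                  key=lambda item: item[1], reverse=True)

# Hypothetical votes: Option H appears in 9 pairs and wins 8 of them
votes = [("Option H", "Option A")] * 8 + [("Option A", "Option H")]
print(rank_by_win_rate(votes))  # [('Option H', 89), ('Option A', 11)]
```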

2. Monadic Studies

Monadic studies are like the opposite of a comparative preference test. Rather than show options together, you completely isolate them by only showing one option at a time. Every option is assigned its own survey (each with an identical list of questions) which gets sent to a separate group of respondents. At the end, the results of all the surveys are aggregated and compared.

Unlike preference testing, monadic studies can’t compare the main options against each other directly, so they generally rely on non-comparative question types such as:

Likert scales → eg. “rate this mockup on a 5-point scale from disinterested to interested.”

Open-ended questions → eg. “what do you like most about the design of this product?”

Semantic differential scales → eg. “rate this advert on a 5-point scale from budget to luxury.”

Brand perception → eg. “how would you describe the personality of this brand?”

Price sensitivity → eg. “at what price point would you consider this product too expensive?”

Recommendation → eg. “how likely are you to recommend [product] to a friend or colleague?”

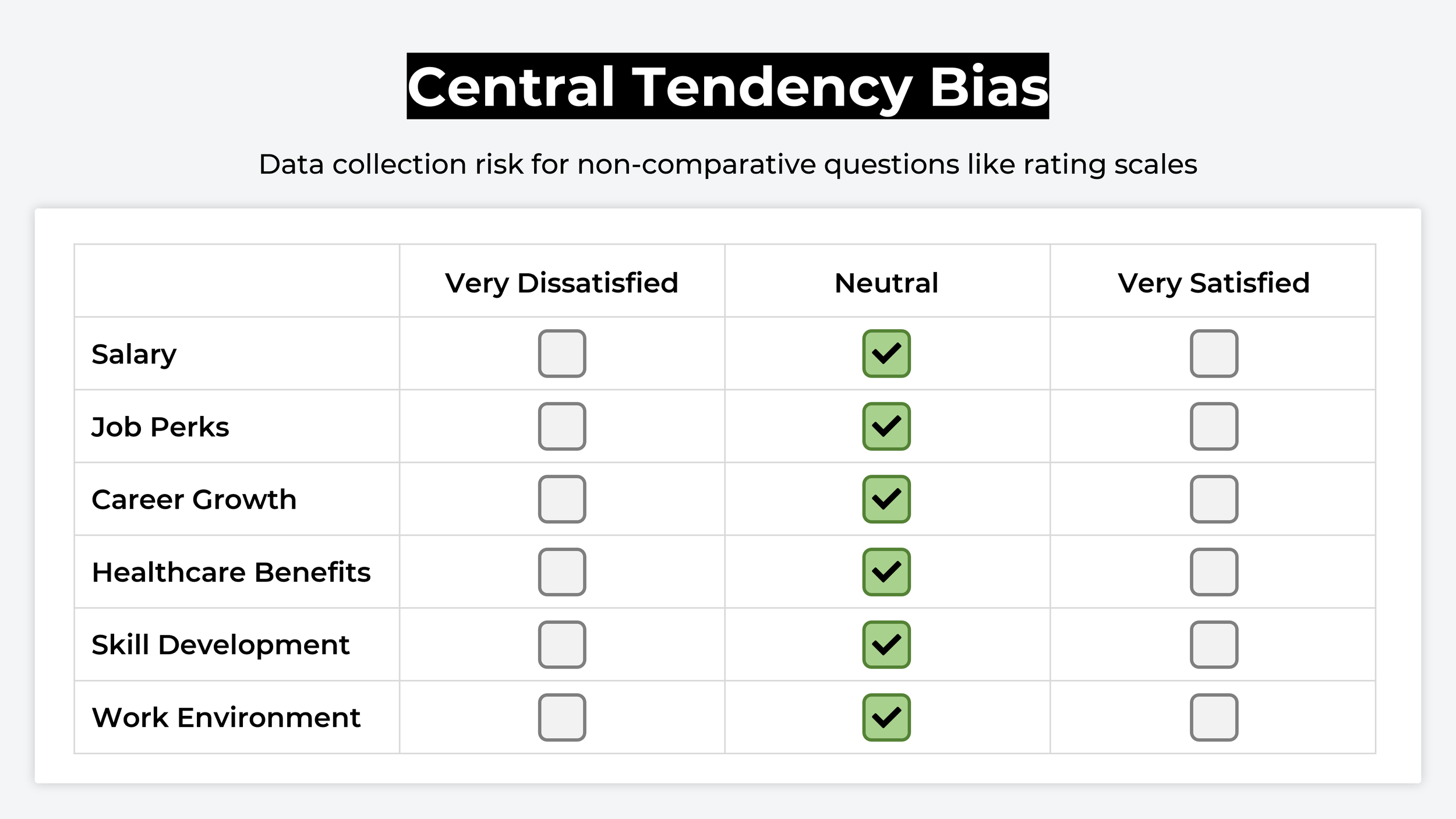

The challenge with these non-comparative question formats is “central tendency bias”: the tendency for participants to avoid the extremes of a scale and cluster their answers around the middle, like rating everything “neutral” or “3/5 stars”.

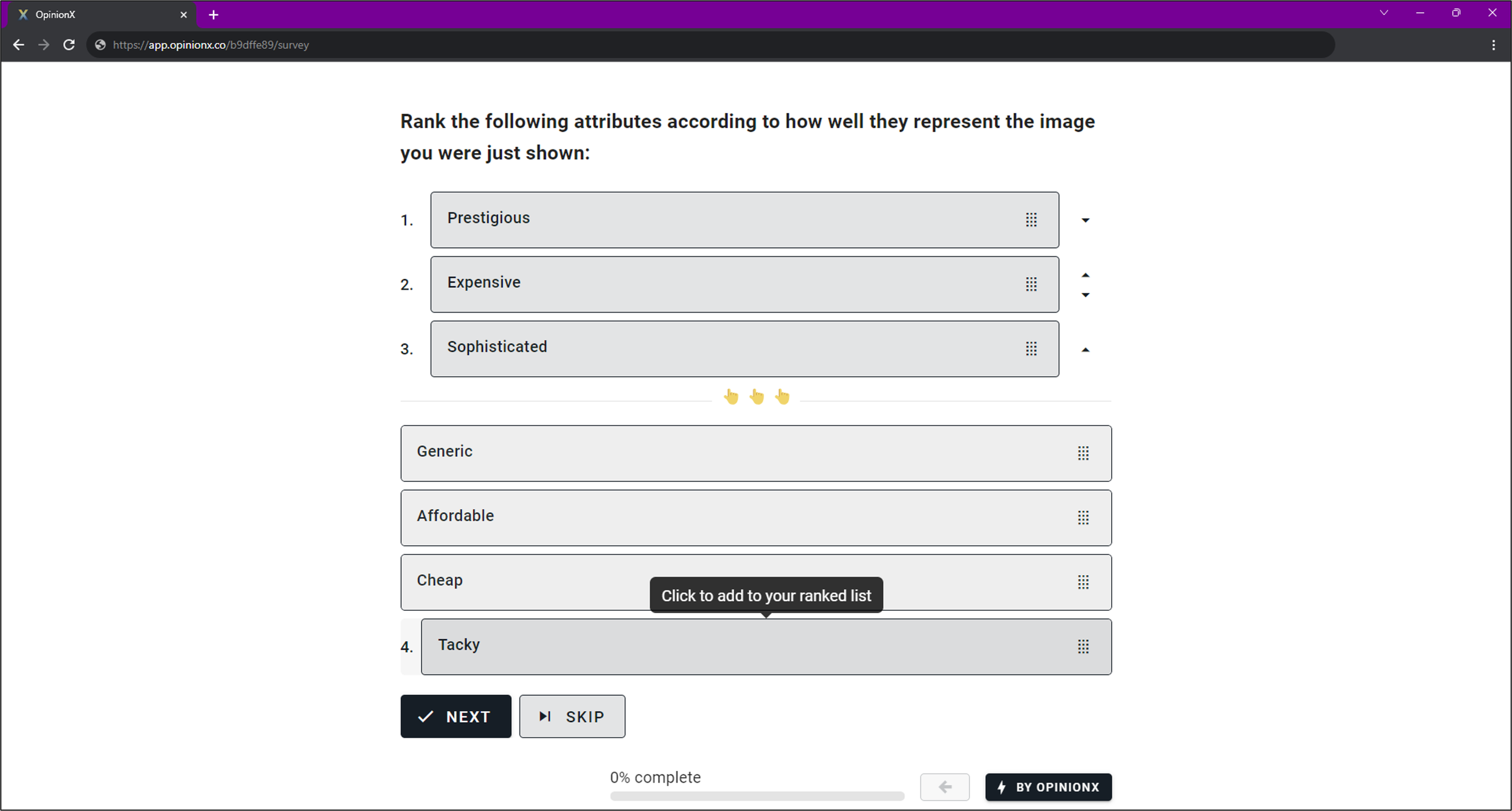
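To make the aggregation step of a monadic study concrete, here is a minimal sketch that summarizes 5-point ratings collected from two separate respondent groups. The mockup names and ratings are hypothetical, and the "midpoint share" is just one rough signal I've picked for spotting the "everything gets a 3" pattern described above, not a standard metric.

```python
from statistics import fmean

# Hypothetical 5-point ratings, one list per option's separate survey
responses = {
    "Mockup A": [4, 5, 3, 4, 4],
    "Mockup B": [3, 3, 3, 2, 3],
}

def summarize(ratings, midpoint=3):
    """Sample size, average rating, and share of answers at the midpoint."""
    return {
        "n": len(ratings),
        "mean": round(fmean(ratings), 2),
        "midpoint_share": round(ratings.count(midpoint) / len(ratings), 2),
    }

summary = {option: summarize(r) for option, r in responses.items()}
for option, stats in summary.items():
    print(option, stats)
```

A high midpoint share (like Mockup B's in this made-up data) suggests the scale isn't separating your options very well.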

You can overcome central tendency bias by using comparison-based questions within your monadic studies. For example, a monadic study to understand consumer perception towards concept product designs could use a ranked choice voting question to show a list of brand attributes (like “luxurious”, “affordable”, “basic”) for participants to rank in relation to a concept image. This can help you identify which version of your product is most associated with your target attribute/description like “luxurious”.

Screenshot from a Ranked Choice Voting survey on OpinionX

3. Segmentation Analysis

Regardless of which A/B testing approach you decide to run — a single survey that uses comparative preference testing or a series of monadic studies — it is essential that you plan ahead for segmentation analysis afterwards.

What is segmentation analysis? It’s the process of filtering your results to only include participants that fall into a specific group of interest. For example, you could filter your results to only view data from female respondents or you could compare the difference between male vs female respondents.

Segmentation is essential because representative samples lead to vague or average results. You don’t want to know which option “people” pick more often — you need to know which option your most-ideal best-fit customers flock to. Here’s an example of how segmentation can flip your results, showing how a low-performing option jumps to the best-performing one when viewed through the right segment:

How do you do segmentation analysis on an A/B testing survey? You need participant profile data — information about who each person in your survey is. The easiest way to do this is to ask multiple choice questions in your survey that allow people to self-identify which segments they belong to. Even better would be to have this data on participants already so that you can import and enrich your survey results with this demographic, firmographic, and financial data.

Some survey tools come with purpose-built segmentation analysis features. For example, OpinionX has a bunch of segmentation features that range from simple one-click filtering to advanced visualizations of every possible segment based on your participant data.

Illustrated example of segmentation analysis on OpinionX

Alternatively, you can segment your results manually by downloading an aggregated spreadsheet of results from your survey provider that is oriented around participant profiles (here’s an example of how that looks on OpinionX). This will allow you to filter your data manually on Excel or Google Sheets to calculate the results for each segment.
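If you'd rather script it than filter a spreadsheet by hand, the same idea can be sketched in Python. The rows below are hypothetical and the column names (`segment`, `choice`) are my own, so treat them as placeholders for whatever your survey export actually contains. The made-up data is arranged to show how the winner can flip once you filter to a single segment.

```python
from collections import Counter

# Hypothetical participant-level export: one row per respondent
rows = [
    {"segment": "SMB", "choice": "Option A"},
    {"segment": "SMB", "choice": "Option A"},
    {"segment": "SMB", "choice": "Option A"},
    {"segment": "SMB", "choice": "Option A"},
    {"segment": "SMB", "choice": "Option B"},
    {"segment": "Enterprise", "choice": "Option B"},
    {"segment": "Enterprise", "choice": "Option B"},
    {"segment": "Enterprise", "choice": "Option B"},
    {"segment": "Enterprise", "choice": "Option A"},
]

def top_choice(rows, segment=None):
    """Most-picked option overall, or within one segment if given."""
    filtered = [r for r in rows
                if segment is None or r["segment"] == segment]
    counts = Counter(r["choice"] for r in filtered)
    return counts.most_common(1)[0][0]

print(top_choice(rows))                # Option A (all respondents)
print(top_choice(rows, "Enterprise"))  # Option B (one segment only)
```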

Conclusion

A/B testing is a popular type of experiment because it provides actionable data about people’s behavior and how that translates to business outcomes. But sample size requirements often limit website A/B testing to large companies, and that doesn’t need to be the case! Using comparative preference testing methods like pairwise comparison, or running monadic studies, will let you leverage the principles of A/B testing in much more flexible ways so that you can identify your best-performing image, feature idea, ad copy, sales messaging, problem statement, and more.

OpinionX is a free research tool for creating comparative ranking surveys that’s used by thousands of teams from companies like Disney, LinkedIn and Shopify. It comes with a range of question formats and one-click segmentation analysis to help you get advanced insights without needing to be an expert data scientist or researcher.

Create your own free A/B test today at app.opinionx.co